Get default metrics for quantile-based forecasts

Source: R/class-forecast-quantile.R (get_metrics.forecast_quantile.Rd)

For quantile-based forecasts, the default scoring rules are:

- "wis" = wis()
- "overprediction" = overprediction_quantile()
- "underprediction" = underprediction_quantile()
- "dispersion" = dispersion_quantile()
- "bias" = bias_quantile()
- "interval_coverage_50" = interval_coverage()
- "interval_coverage_90" = purrr::partial(interval_coverage, interval_range = 90)
- "ae_median" = ae_median_quantile()
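The defaults are returned as a named list of functions, so the rule names above can be inspected directly. This is a minimal sketch that assumes scoringutils (2.x) is installed and that example_quantile is the package's example forecast_quantile object, as in the Examples section.

```r
library(scoringutils)

# get_metrics() returns a named list of scoring functions
metrics <- get_metrics(example_quantile)

# Names of the default scoring rules for quantile-based forecasts
metric_names <- names(metrics)
print(metric_names)

# Every element, including the purrr::partial() one, is a function
all_functions <- all(vapply(metrics, is.function, logical(1)))
```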

Note: The interval_coverage_90 scoring rule is created by modifying interval_coverage() with purrr::partial(). This construct allows the function to accept arbitrary arguments in ... while ensuring that only those that interval_coverage() can accept are passed on to it. interval_range = 90 is set in the function definition because passing interval_range = 90 to score() would also pass it on to interval_coverage_50.
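The same pattern can be used to add another fixed-range coverage rule. This is a minimal sketch assuming scoringutils (2.x) and purrr are installed; the rule name interval_coverage_80 and the toy forecast are hypothetical, chosen only for illustration. The range is fixed inside the function instead of being passed to score(), so interval_coverage_50 keeps its default range of 50.

```r
library(scoringutils)

# Start from the default quantile metrics
metrics <- get_metrics(example_quantile)

# Hypothetical extra rule: fix interval_range = 80 in the function itself,
# mirroring how interval_coverage_90 is built
metrics$interval_coverage_80 <- purrr::partial(interval_coverage, interval_range = 80)

# Toy forecast: one observation and seven predictive quantiles
observed <- 7
quantile_level <- c(0.05, 0.1, 0.25, 0.5, 0.75, 0.9, 0.95)
predicted <- c(1, 2, 3, 4, 6, 8, 10)

# The 50% interval is [3, 6] (observation outside),
# the 80% interval is [2, 8] (observation inside)
cov50 <- metrics$interval_coverage_50(observed, predicted, quantile_level)
cov80 <- metrics$interval_coverage_80(observed, predicted, quantile_level)
print(c(cov50 = cov50, cov80 = cov80))
```

The extended list can then be supplied to score() in place of the defaults (in scoringutils 2.x, through its metrics argument).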

Usage

```r
# S3 method for class 'forecast_quantile'
get_metrics(x, select = NULL, exclude = NULL, ...)
```

Arguments

- x: A forecast object (a validated data.table with predicted and observed values; see as_forecast_quantile()).
- select: A character vector of scoring rules to select from the list. If select is NULL (the default), all possible scoring rules are returned.
- exclude: A character vector of scoring rules to exclude from the list. If select is not NULL, this argument is ignored.
- ...: Unused.
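A short sketch of how select and exclude interact, assuming scoringutils (2.x) is installed and example_quantile is the package's example forecast_quantile object. The variable names are illustrative only.

```r
library(scoringutils)

# Keep only the listed rules
wis_and_bias <- get_metrics(example_quantile, select = c("wis", "bias"))

# Drop the listed rules and keep all others
no_coverage <- get_metrics(
  example_quantile,
  exclude = c("interval_coverage_50", "interval_coverage_90")
)

# When select is supplied, exclude is ignored
selected_anyway <- get_metrics(example_quantile, select = "wis", exclude = "wis")

print(names(wis_and_bias))
print(names(no_coverage))
print(names(selected_anyway))
```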

See also

Other get_metrics functions: get_metrics(), get_metrics.forecast_binary(), get_metrics.forecast_nominal(), get_metrics.forecast_ordinal(), get_metrics.forecast_point(), get_metrics.forecast_sample(), get_metrics.scores()

Examples

get_metrics(example_quantile, select = "wis")

#> $wis

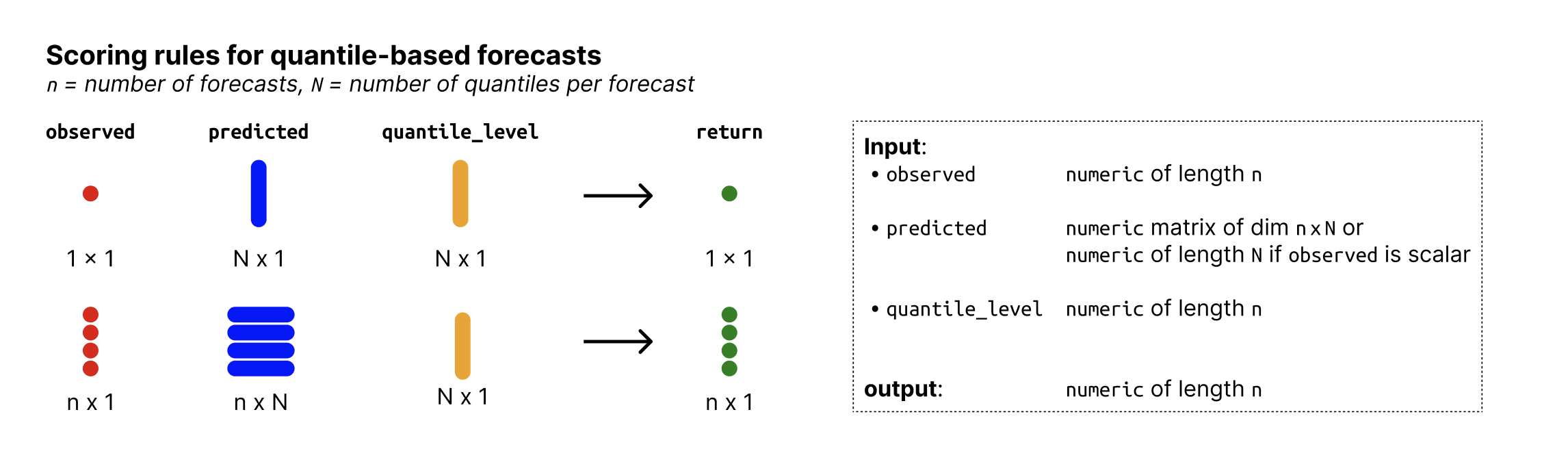

#> function (observed, predicted, quantile_level, separate_results = FALSE,

#> weigh = TRUE, count_median_twice = FALSE, na.rm = FALSE)

#> {

#> assert_input_quantile(observed, predicted, quantile_level)

#> reformatted <- quantile_to_interval(observed, predicted,

#> quantile_level)

#> interval_ranges <- get_range_from_quantile(quantile_level[quantile_level !=

#> 0.5])

#> complete_intervals <- duplicated(interval_ranges) | duplicated(interval_ranges,

#> fromLast = TRUE)

#> if (!all(complete_intervals) && !isTRUE(na.rm)) {

#> incomplete <- quantile_level[quantile_level != 0.5][!complete_intervals]

#> cli_abort(c(`!` = "Not all quantile levels specified form symmetric prediction\n intervals.\n The following quantile levels miss a corresponding lower/upper bound:\n {.val {incomplete}}.\n You can drop incomplete prediction intervals using `na.rm = TRUE`."))

#> }

#> assert_logical(separate_results, len = 1)

#> assert_logical(weigh, len = 1)

#> assert_logical(count_median_twice, len = 1)

#> assert_logical(na.rm, len = 1)

#> if (separate_results) {

#> cols <- c("wis", "dispersion", "underprediction", "overprediction")

#> }

#> else {

#> cols <- "wis"

#> }

#> reformatted[, `:=`(eval(cols), do.call(interval_score, list(observed = observed,

#> lower = lower, upper = upper, interval_range = interval_range,

#> weigh = weigh, separate_results = separate_results)))]

#> if (count_median_twice) {

#> reformatted[, `:=`(weight, 1)]

#> }

#> else {

#> reformatted[, `:=`(weight, ifelse(interval_range == 0,

#> 0.5, 1))]

#> }

#> reformatted <- reformatted[, lapply(.SD, weighted.mean, na.rm = na.rm,

#> w = weight), by = "forecast_id", .SDcols = colnames(reformatted) %like%

#> paste(cols, collapse = "|")]

#> if (separate_results) {

#> return(list(wis = reformatted$wis, dispersion = reformatted$dispersion,

#> underprediction = reformatted$underprediction, overprediction = reformatted$overprediction))

#> }

#> else {

#> return(reformatted$wis)

#> }

#> }

#> <bytecode: 0x556609cfd1d0>

#> <environment: namespace:scoringutils>

#>
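The printed source shows that with separate_results = TRUE, wis() returns the WIS together with its dispersion, underprediction and overprediction components. The sketch below, assuming scoringutils (2.x) is installed and using a made-up toy forecast, calls wis() directly and checks numerically that the three components add up to the WIS, which is the usual decomposition of the weighted interval score.

```r
library(scoringutils)

# Toy forecast: the 50% interval is [3, 6], the 80% interval is [2, 8],
# and the median is 4; the observation lies above the median
observed <- 7
quantile_level <- c(0.1, 0.25, 0.5, 0.75, 0.9)
predicted <- c(2, 3, 4, 6, 8)

# separate_results = TRUE returns a named list (wis, dispersion,
# underprediction, overprediction), as in the printed source
res <- wis(observed, predicted, quantile_level, separate_results = TRUE)

# The components should sum to the WIS up to floating-point error
components_sum <- res$dispersion + res$underprediction + res$overprediction
print(res$wis)
print(components_sum)
```

Because the observation lies above the forecast median and outside the 50% interval, the penalty lands in underprediction, while overprediction stays at zero.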